AI/ML for Anomaly Detection and Reducing MTTR in Observability

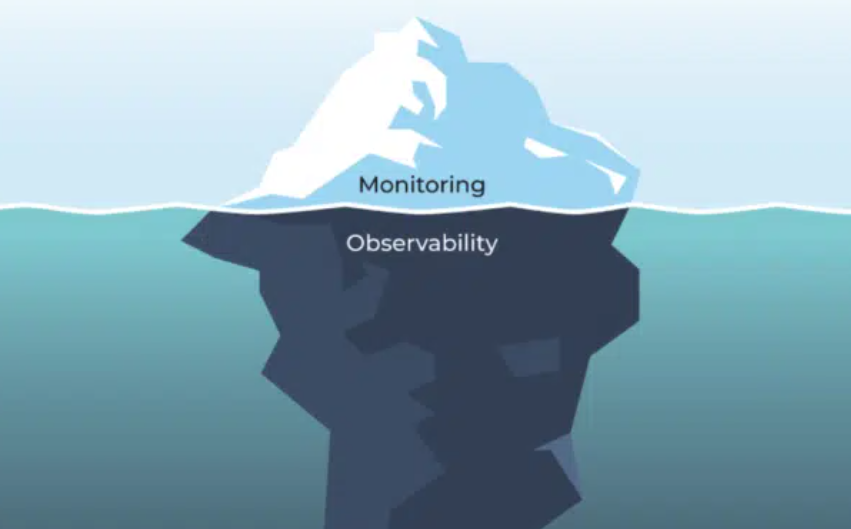

As software systems become increasingly complex, managing them becomes more challenging. Complex systems require a comprehensive observability approach that can collect, correlate, and analyze different types of data. The emergence of AI/ML technologies, combined with observability, presents a powerful opportunity to detect anomalies and improve Mean Time To Resolution (MTTR).
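
MTTR is commonly calculated as the total time spent resolving incidents divided by the number of incidents. Teams differ on whether the clock starts when an incident begins or when it is detected. The minimal sketch below assumes each incident is recorded as a hypothetical (opened, resolved) pair of timestamps; the function name mean_time_to_resolution is illustrative, not part of any tool.

```python
from datetime import datetime, timedelta


def mean_time_to_resolution(incidents):
    """Return the average resolution time for a list of (opened, resolved) datetime pairs."""
    if not incidents:
        raise ValueError("need at least one incident")
    # Sum the individual resolution times, starting from a zero timedelta
    total = sum((resolved - opened for opened, resolved in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incidents: one took 90 minutes to resolve, the other 30 minutes
incidents = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 10, 30)),
    (datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 14, 30)),
]
print(mean_time_to_resolution(incidents))  # 1:00:00
```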

Anomaly detection is one of the critical use cases where AI/ML plays a vital role in observability. Instead of relying on manual analysis, teams can use AI/ML algorithms to find anomalies in vast data sets by learning the patterns of normal behavior and flagging deviations from them. This post discusses some of the key ways that observability and AI/ML technologies can reduce MTTR through actionable recommendations, and highlights the challenges and best practices involved.

Anomaly Detection: Challenges and Solutions

The main challenge with anomaly detection is the sheer volume of data generated by modern software systems. Traditional monitoring tools collect this data, but finding the patterns that indicate anomalous behavior in it is difficult to do by hand or with static thresholds. This is where AI/ML-based anomaly detection comes in: machine learning algorithms can analyze vast amounts of data to identify patterns indicative of anomalous behavior. These tools can automatically categorize issues and predict system behavior, allowing teams to take corrective action before issues impact users.

Some of the key benefits of AI/ML-based anomaly detection include:

Improved accuracy: AI/ML-based anomaly detection can identify patterns that may not be obvious to human operators, which can make anomaly detection more accurate and reliable than manual review.

Faster resolution: With AI/ML-based anomaly detection, teams can identify issues before they impact users, thus reducing MTTR.

Automation: AI/ML-based anomaly detection can help automate many tasks related to monitoring and troubleshooting systems.

Predictive analytics: With AI/ML-based anomaly detection, teams can predict system behavior and take proactive measures to prevent issues.
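
Predictive analytics can involve sophisticated seasonal forecasting models, but the core idea can be illustrated with a much simpler technique: fit a straight-line trend to recent readings and extrapolate it to estimate when a metric will cross a limit. The sketch below uses ordinary least squares; the function names and the disk-usage readings are hypothetical, and production tools typically use richer models that also account for seasonality.

```python
def fit_linear_trend(times, values):
    """Ordinary least-squares line through (time, value) points; returns (slope, intercept)."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two (time, value) pairs")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("times must not all be equal")
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = cov / var_t
    return slope, mean_v - slope * mean_t


def time_until_threshold(times, values, threshold):
    """Extrapolate the trend; return time remaining after the last point, or None if never reached."""
    slope, intercept = fit_linear_trend(times, values)
    if slope <= 0:
        return None  # a flat or decreasing trend never reaches an upper threshold
    crossing = (threshold - intercept) / slope
    return max(crossing - times[-1], 0.0)


# Hypothetical hourly disk-usage readings (percent)
hours = [0, 1, 2, 3, 4]
usage = [50, 52, 54, 56, 58]
print(time_until_threshold(hours, usage, 90))  # 16.0
```

With these readings the trend rises 2 points per hour, so a 90% limit would be reached about 16 hours after the last reading, early enough to act before users are affected.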

Best Practices for Enabling AI/ML for Anomaly Detection

To enable AI/ML for anomaly detection, teams can follow these best practices:

Collect diverse data: The more diverse the data collected, the more effective AI/ML-based anomaly detection tends to be. Teams should collect data from multiple sources, such as logs, metrics, and traces, to get a comprehensive view of their systems.

Establish baselines: Before anomaly detection can be effective, teams need to establish baselines for normal system behavior. Baselines can be established by analyzing historical data.

Choose the right algorithms: There are many types of AI/ML algorithms available for anomaly detection. Teams should choose the algorithm that is appropriate for their specific use case.

Continually refine models: System behavior changes over time, so models need regular refinement to stay accurate. Teams should periodically review and update models to keep them effective.
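
Establishing a baseline and measuring deviations from it can be illustrated with a simple statistical technique, the z-score (standard score): learn the mean and standard deviation of historical data, then flag new readings that fall more than a chosen number of standard deviations away. This is a deliberately basic stand-in for the ML algorithms discussed above; the function names, the threshold of 3, and the sample readings are assumptions for illustration.

```python
import statistics


def build_baseline(history):
    """Summarize historical 'normal' readings as (mean, sample standard deviation)."""
    if len(history) < 2:
        raise ValueError("need at least two historical readings")
    stdev = statistics.stdev(history)
    if stdev == 0:
        raise ValueError("history has no variation to learn from")
    return statistics.mean(history), stdev


def find_anomalies(values, baseline, threshold=3.0):
    """Return (index, value, z-score) for readings more than `threshold` standard deviations from the mean."""
    mean, stdev = baseline
    anomalies = []
    for i, value in enumerate(values):
        z = (value - mean) / stdev
        if abs(z) > threshold:
            anomalies.append((i, value, z))
    return anomalies


# Hypothetical temperature history and new readings
history = [70, 71, 69, 70, 72, 68, 70, 71, 69, 70]
baseline = build_baseline(history)
print([i for i, _, _ in find_anomalies([70, 71, 85, 69], baseline)])  # [2]
```

Rebuilding the baseline from a recent window of data at a regular interval is one simple way to apply the refinement practice. A single global mean also ignores daily or weekly seasonality, which is one reason choosing a more capable algorithm pays off for real workloads.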

Trends in AI/ML for Observability

As observability continues to evolve, there are several trends in AI/ML that teams can adopt to enhance their anomaly detection capabilities. Here are some of the trends in AI/ML for observability worth considering:

Auto-remediation: Auto-remediation is a trend in AI/ML for observability that involves using intelligent automation tools to diagnose and fix issues automatically.

Explainable AI: Explainable AI refers to AI/ML models that can explain why they made a particular decision. This can help build trust in these models and make it easier for teams to take corrective action based on their recommendations.

Real-time monitoring: Real-time monitoring is a trend in AI/ML for observability that involves analyzing large volumes of streaming data in real time. This allows teams to detect anomalies quickly and take corrective action before issues escalate.
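
Streaming detection has to score each data point as it arrives, without revisiting history. One common lightweight technique for this is an exponentially weighted moving average (EWMA) with an incrementally updated variance. The hypothetical EwmaDetector class below sketches it; it is not how any particular vendor implements real-time detection, and the smoothing factor, threshold, and warm-up length are illustrative defaults. Returning the expected value and the deviation alongside the flag also hints at the explainability trend: even a simple detector can report why it flagged a point.

```python
import math


class EwmaDetector:
    """Online anomaly detector based on an exponentially weighted mean and variance."""

    def __init__(self, alpha=0.1, threshold=3.0, warmup=10):
        self.alpha = alpha          # weight given to each new reading
        self.threshold = threshold  # how many standard deviations count as anomalous
        self.warmup = warmup        # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, value):
        """Score one reading, then fold it into the running statistics.

        Returns (is_anomaly, expected, deviation) so callers can explain the decision.
        """
        self.count += 1
        if self.count == 1:
            self.mean = value
            return False, value, 0.0
        expected = self.mean
        diff = value - expected
        std = math.sqrt(self.var)
        is_anomaly = self.count > self.warmup and std > 0 and abs(diff) > self.threshold * std
        # Incremental exponentially weighted updates of mean and variance
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly, expected, diff


# Hypothetical stream: readings alternating between 10 and 11, then a spike
detector = EwmaDetector()
for reading in [10.0, 11.0] * 15 + [30.0]:
    is_anomaly, expected, deviation = detector.update(reading)
    if is_anomaly:
        print(f"anomaly: got {reading}, expected about {expected:.2f}")
```

This sketch folds flagged points into the running statistics, so a persistent level shift gradually becomes the new normal; skipping the update for flagged points is a common alternative.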

Below is a step-by-step example of how to implement an AI/ML-based anomaly detection system using Python, Elasticsearch, and Kibana.

Step 1: Setting up the environment

In this example, we’ll be using Python as the primary programming language. Before we begin, we need to make sure that the following tools are installed on our system:

Python 3.x

Elasticsearch

Kibana

The elasticsearch Python client, matching your Elasticsearch version (pip install elasticsearch)

Once you have installed these tools, you can proceed with the following steps:

Step 2: Collect data

We need data to train our model. In this example, we’ll use the Numenta Anomaly Benchmark (NAB) dataset. NAB, created by Numenta, is a benchmark for evaluating anomaly detection algorithms on real-world data. You can download the data from the following link:

https://github.com/numenta/NAB/tree/master/data

The file used in this example, machine_temperature_system_failure.csv, is in the realKnownCause folder and contains a timestamp column and a value column. After downloading it, we’ll use Python to preprocess the data and load it into Elasticsearch, which will train the model.

Step 3: Preprocessing data

Before we train our anomaly detection model, we need to preprocess and clean the data. This typically involves removing missing data, scaling the data, and selecting relevant features.

Here is an example of how to read the data from a CSV file, preprocess it, and store it in Elasticsearch:

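```python
import csv

from elasticsearch import Elasticsearch, helpers

# Connect to Elasticsearch (adjust the URL and add credentials for your cluster)
es = Elasticsearch("http://localhost:9200")

# Create the index with explicit field types: the ML job needs "timestamp" mapped
# as a date and "value" mapped as a number so it can compute a mean
if not es.indices.exists(index="anomaly"):
    es.indices.create(
        index="anomaly",
        mappings={
            "properties": {
                "timestamp": {"type": "date", "format": "yyyy-MM-dd HH:mm:ss"},
                "value": {"type": "float"},
            }
        },
    )

# Read the data from the CSV file
data = []
with open('machine_temperature_system_failure.csv', newline='') as csvfile:
    reader = csv.reader(csvfile, delimiter=',')
    next(reader, None)  # skip the header row
    for row in reader:
        if len(row) < 2 or not row[0] or not row[1]:
            continue  # skip rows with missing fields
        data.append({
            'timestamp': row[0],
            'value': float(row[1]),  # store as a number, not a string
        })

# Store the data in Elasticsearch with the bulk helper (batched requests, not one per row)
helpers.bulk(
    es,
    ({"_index": "anomaly", "_id": i, "_source": doc} for i, doc in enumerate(data)),
)
```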

We’re using the Python Elasticsearch library to communicate with Elasticsearch. The code reads data from a CSV file, removes the header row, and inserts the data into Elasticsearch. The data is stored in an index called “anomaly.”
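
The loader above does little cleaning beyond skipping the header row. The other preprocessing steps mentioned earlier, removing missing data and scaling, can be sketched in plain Python as below. The helper names load_clean_series and min_max_scale are hypothetical. The Elasticsearch job in Step 4 works on the raw values, so scaling is optional for this walkthrough; it matters mainly for algorithms that compare magnitudes across features.

```python
import csv
import math


def load_clean_series(lines):
    """Parse (timestamp, value) rows, skipping the header and rows with missing or invalid values."""
    reader = csv.reader(lines)
    next(reader, None)  # skip the header row
    rows = []
    for row in reader:
        if len(row) < 2 or not row[0].strip():
            continue  # missing timestamp
        try:
            value = float(row[1])
        except ValueError:
            continue  # empty or non-numeric value
        if not math.isfinite(value):
            continue  # NaN or infinity
        rows.append((row[0].strip(), value))
    return rows


def min_max_scale(rows):
    """Rescale values to the range [0, 1], keeping timestamps unchanged."""
    if not rows:
        return []
    values = [value for _, value in rows]
    low, high = min(values), max(values)
    span = (high - low) or 1.0  # avoid dividing by zero for a constant series
    return [(ts, (value - low) / span) for ts, value in rows]


# Usage with the NAB file from Step 2:
# with open('machine_temperature_system_failure.csv', newline='') as f:
#     scaled = min_max_scale(load_clean_series(f))
```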

Step 4: Training the model

Once the data is loaded into Elasticsearch, we can begin the process of training our anomaly detection model. In this example, we’ll use Elasticsearch’s built-in machine learning capabilities to do this. Note that these features require a Platinum or Enterprise subscription, or a trial license.

Here is an example of how to train an anomaly detection model using Elasticsearch:

```python
from elasticsearch import Elasticsearch

# Connect to the same cluster used in Step 3
es = Elasticsearch("http://localhost:9200")

JOB_ID = "anomaly_detection_job"
DATAFEED_ID = "datafeed-anomaly_detection_job"

# Create the anomaly detection job, which defines what the model learns
es.ml.put_job(
    job_id=JOB_ID,
    analysis_config={
        "bucket_span": "5m",  # the NAB readings arrive every 5 minutes
        "detectors": [
            # Unusual numbers of documents per bucket (e.g. gaps in the data)
            {"detector_description": "Count", "function": "count"},
            # Unusual average temperature per bucket
            {
                "detector_description": "Mean temperature",
                "function": "mean",
                "field_name": "value",
            },
        ],
    },
    data_description={"time_field": "timestamp", "time_format": "epoch_ms"},
    model_snapshot_retention_days=1,
)

# Open the job so it can receive data
es.ml.open_job(job_id=JOB_ID)

# Create a datafeed that reads documents from the "anomaly" index into the job
es.ml.put_datafeed(
    datafeed_id=DATAFEED_ID,
    job_id=JOB_ID,
    indices=["anomaly"],
    query={"match_all": {}},
    frequency="5m",
    scroll_size=1000,
)

# Start the datafeed: a new job analyzes from the earliest available data, and
# with no end time the datafeed keeps running in real time afterwards
es.ml.start_datafeed(datafeed_id=DATAFEED_ID)
```

Here, we’re training an anomaly detection model using the Elasticsearch machine learning API. The job, which defines the detectors and the bucket span, is created and opened first; a datafeed then reads documents from the “anomaly” index and sends them to the job, and the model is trained as that data arrives.

Step 5: Visualizing results in Kibana

After training the model, we can visualize the anomalies in Kibana. Here’s an example of how to do this:

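Open Kibana in your browser and go to Machine Learning (under Analytics in the main menu).

Under “Anomaly Detection,” open the “Jobs” page and select the “anomaly_detection_job” job that we created in Step 4.

Open the job’s results in the Anomaly Explorer or the Single Metric Viewer to see the anomalies detected by the model.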

You can also create a dashboard in Kibana to visualize the results in a more user-friendly way.
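
Besides browsing results in Kibana, anomaly records can be retrieved programmatically with the get records API (es.ml.get_records in the Python client). The hypothetical summarize_records helper below turns such a response into readable lines. It assumes the record fields record_score, timestamp (epoch milliseconds), function, actual, and typical, and the minimum score of 75 is an arbitrary choice.

```python
from datetime import datetime, timezone


def summarize_records(response, min_score=75.0):
    """Format anomaly records from a get-records response, highest score first."""
    records = sorted(
        response.get("records", []),
        key=lambda rec: rec.get("record_score", 0.0),
        reverse=True,
    )
    lines = []
    for rec in records:
        score = rec.get("record_score", 0.0)
        if score < min_score:
            continue  # skip low-severity anomalies
        when = datetime.fromtimestamp(rec["timestamp"] / 1000, tz=timezone.utc)
        actual = rec.get("actual", [None])[0]
        typical = rec.get("typical", [None])[0]
        lines.append(
            f"{when:%Y-%m-%d %H:%M} UTC score={score:.1f} "
            f"{rec.get('function')}: actual={actual} typical={typical}"
        )
    return lines


# Hypothetical usage against the job from Step 4 (requires a running cluster):
# resp = es.ml.get_records(job_id="anomaly_detection_job", record_score=75)
# for line in summarize_records(resp.body):
#     print(line)
```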

And that’s it! We have successfully implemented an AI/ML-based anomaly detection system using Python, Elasticsearch, and Kibana. This example provides a high-level overview of the implementation process, and there may be additional steps or details specific to your use case that you need to consider.

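Commercial observability platforms such as New Relic, Google Cloud Operations (GCO), and Datadog also provide AI/ML features for anomaly detection and reducing MTTR: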

New Relic: New Relic offers the following features for AI/ML-based anomaly detection and MTTR reduction:

Applied Intelligence: This feature uses machine learning algorithms to identify patterns and anomalies in data and provide actionable insights to reduce MTTR.

New Relic AI: This feature uses machine learning to analyze data from multiple sources and provide insights into application performance issues and potential root causes.

NRQL Alerting: This feature allows users to create custom alert conditions from NRQL queries over metrics and events, which can help detect anomalies and reduce MTTR.

Google Cloud Operations (GCO): GCO offers the following features for AI/ML-based anomaly detection and MTTR reduction:

Anomaly Detection: This feature uses machine learning to detect anomalies in metrics data and alert users to issues that require attention.

Alerting and Notification: This feature provides real-time alerting with customizable notification channels to help reduce MTTR.

Datadog: Datadog offers the following features for AI/ML-based anomaly detection and MTTR reduction:

Anomaly Detection: This feature uses machine learning to identify and alert users to anomalies in metrics and event data.

Alerting: This feature allows users to set custom alerts based on specific metrics and events to quickly identify and resolve issues, thereby reducing MTTR.

Predictive Analytics: This feature uses machine learning to forecast future metrics and alert users to potential issues before they occur, thereby reducing MTTR.

Conclusion

Observability combined with AI/ML plays a critical role in detecting anomalies and providing actionable recommendations that reduce MTTR. By leveraging AI/ML, teams can identify patterns indicative of anomalous behavior in vast data sets, enabling them to take corrective action before issues impact users. However, implementing AI/ML for observability presents some challenges, including processing large volumes of data and choosing the right algorithms. By following best practices, teams can make their anomaly detection effective, and by adopting emerging trends in AI/ML, they can stay ahead of the curve and detect anomalies more proactively.